This article summarises the findings of two OCLC research reports which recently documented how university research is assessed in five countries and the role research libraries play in the various schemes. Libraries’ administrative role in supplying bibliometrics is the most obvious. However, the author advocates a much more strategic role for libraries: to focus on the scholarly activity all around the library, to curate, advise on and preserve the manifold outputs of research activity.

Lorcan Dempsey wrote a blog entry a few years ago in which he stated that ‘the network reconfigures the library systems environment’ (July 2007).[1] Within OCLC Research we organise our research activity into programmes, and the one that has recently been concerned with library roles in research assessment is ‘Research Information Management’. I like to use a variation on Lorcan’s comment to capture what I consider to be the essence of the Research Information Management programme: ‘the research environment reconfigures the library’.

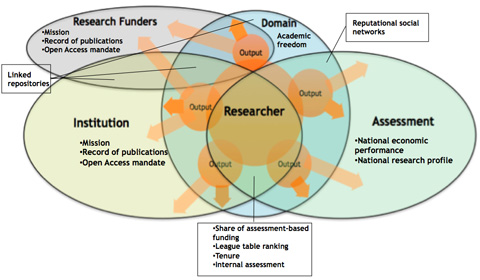

In setting up this programme we spent some time thinking about researcher environments. Our starting point was that, in order to achieve the understanding needed to provide support for research, we had to see the world with the researcher at the centre. Accordingly, we came up with this model.

What the researcher does that concerns us as librarians is to produce outputs. Researchers inhabit a number of environments, which overlap with each other in different ways according to national cultures, disciplinary cultures and funding regimes. The environment that matters most to academics, and is closest to their interests, is that of their domain. Here scholars can do the things that come naturally to them; this is the environment that provides for academic freedom. In a perfect world, scholars would inhabit this domain only, and be sufficiently remunerated that all they cared about was being a scholar: doing research, breaking new ground, gaining reputation and credit in their field, and working their way up the academic career ladder through readership and professorship to emeritus status and, perhaps, the Nobel prize. But of course, life is not that simple. Researchers depend on funding to support their work, and so the environment created by research funders impinges upon them. Funding bodies, whether governmental or private, charities or commercial companies, each have their own mission, into which the researcher’s grant proposals must fit. They will often want to maintain their own records of the publications that emerge from the projects they fund, and the researcher may have to supply details of these, or even deposit full-text copies of their final published outputs in repositories or databases, perhaps with the aid of their institutional library. Some funders now have Open Access mandates, as do, for example, the NIH in the US and the Wellcome Trust in the UK.

Then of course the institution has a major impact on researchers. Like it or not, they depend on their institution for their monthly salary — plus a number of intellectual benefits. The institution will also have its own mission, and — increasingly — will wish to maintain its own record of publications. It may even now have an Open Access mandate.

Finally, we come to the assessment environment, which was the subject of a project undertaken within our Research Information Management programme last year. This environment really only applies in countries in which a high proportion of the total research budget (or at least of the higher education budget from which researchers are paid) comes from the public purse. For our study, therefore, we chose five countries in which that was the case: the Netherlands, Denmark, the UK, Ireland and Australia. With a considerable amount of public money being spent on university research, there is a growing sense of the need to account for that expenditure, and to demonstrate the value of the research to the taxpayer. The assessment regime is therefore characterised by an emphasis upon national economic performance. Governments want evidence that their investment is helping their country, or region, to compete in global markets. Related to that is a territory’s national research profile: what are its strengths, and where does it sit in the pecking order?

Thinking about how these various environments deal with researcher outputs, we can see that in the domain environment social networks increasingly play a role, though not in all domains. ‘Nature Network’[2], for example, is a place where bioscientists can come together virtually and seek to build their academic reputations through informal group discussion of their latest research and by linking to copies of their papers and drafts. ‘Mendeley’[3] is an interesting new cross-disciplinary, web-scale social tool for researchers. It acquires data about publications in a whole range of hidden ways, largely by detecting them on the hard disks of its academic subscribers, in the way some streaming music services acquire their members’ music data. It is growing organically, in a way that contrasts interestingly with the top-down, mandate-led and frustratingly slow approach of the academy itself. Because of this organic growth, its coverage is currently very lop-sided, with biological sciences and computer and information science the most popular groupings. The social assessment value at that scale is quite attractive, however (it claims 27.5 million articles at the time of writing), and the most-read articles are presented in a constantly changing display on the website. It is riddled with errors, but its approach is fresh and interesting. A service like ‘Mendeley’ may prove to have a gravitational force on the web that universities in the end cannot ignore, and may provide a source upon which assessment tools and services are built.

In the environments of the domain, the institution and the funder, we of course find repositories. A repository like UK PubMed Central[4] is a domain repository, though in the case of the Wellcome Trust it also has a funder mandate behind it. Not all subject (or domain) repositories serve funder requirements. We are by now familiar with institutional repositories, since they tend to be run by libraries. Across the repository landscape as a whole we see a great deal of duplication, within a system that might be said to lack coherence. As you would expect, the repositories concerned each exert a different degree of gravitational pull. Domain repositories tend to be the most attractive of all, but that does not necessarily mean they are the best populated in all disciplines. The institutional repository is unlikely to hold much appeal for researchers, but if deposit is heavily policed and supported by a lot of mediated labour, it may still attract more deposits than the subject repository. This generally incoherent architecture appears to need rationalisation to make the system as a whole more efficient.

At the intersection of domain, institution and assessment, various factors influence and constrain researcher behaviour. Assessment requirements are usually applied by institutions, whose level of concern depends on how far the assessment regime in force, if there is one, determines how the funding pot is shared out. The UK has the most extreme example of this, with £1.6b per year currently disbursed to the sector as a whole according to a formula based on how well individual institutions performed in the last Research Assessment Exercise (RAE). Assessment results can also influence league table positions, which matter increasingly to universities in a tough international marketplace where researchers vie for grants, and institutions vie for students and the best academics.

In countries that operate tenure, it too constrains academic behaviour. Tenure is applied by the institution, but always, of course, with an eye to the metrics that will be used to judge universities against each other, even where there are no highly visible research assessment results. And internal assessment goes on all the time, whether as part of tenure systems, academic staff appraisal, or ‘practice runs’ for national assessment exercises.

Last year we commissioned Key Perspectives to undertake our study. Their report, A Comparative Review of Research Assessment Regimes in Five Countries and the Role of Libraries in the Research Assessment Process[5] appeared in December 2009. It sought to investigate the characteristics of research assessment regimes in the five different countries and to gather key stakeholders’ views about the advantages and disadvantages of research assessment. It provided some analysis of the effect of research assessment procedures on the values of the academy. We were concerned not just to document the practices and procedures. There is an underlying issue here about the nature of scholarship which is surfaced by assessment in its various forms, on which we wanted to provide a library perspective. The report sought also to reveal the characteristics of research library involvement in research assessment support, and to discover the extent to which research assessment forms part of institutions’ strategic planning processes. Finally, it tried to draw out points of good or best practice for libraries in support of national or institutional research assessment.

In January, we followed it up with a companion report, Research assessment and the role of the library[6], which provided a summary of the key findings of the study, with some context for the recent increase in library involvement in research assessment, and recommendations for research libraries.

Research assessment serves several purposes. Reputation is critical to universities, as well as to the academics who work in them, as the marketplace for higher education intensifies on a global scale. The ratings obtained for research feed into university league tables, of which there are an increasing number. This happens both directly in some cases, where scores from a public assessment scheme like that in the UK are factored into the formulae used to generate tables, and indirectly, by affecting the perceptions of scholars whose informed views are gathered via peer review.

Perhaps the best-known league table is the listing produced annually by the UK’s Times Higher Education magazine. International in scope, it is currently in the throes of a major overhaul to increase its validity. The magazine has realised that there is real competition emerging now between these various league tables. It is often criticised for being too ‘UK-centric’, and it will be interesting to see if that is corrected after its refreshment is complete. Its major rival, the Academic Ranking of World Universities, is produced by Shanghai Jiao Tong University. This table is better regarded in several countries, notably the US, which consider it less biased than that of the Times Higher Education. The Times Higher Education’s latest table puts four UK universities (and six US universities) in the world’s top 10, for example. The Academic Ranking of World Universities, on the other hand, puts only two UK universities in the top 10 (Cambridge and Oxford), and the other eight are all American.

Then there are a number of national rankings, generally produced by newspapers. The Complete University Guide is compiled in partnership with the UK broadsheet The Independent, and RAE research assessment scores feed directly into its ranking order. These RAE scores, which used to be published as a single table, appeared in the last incarnation (2008) with a disciplinary breakdown. Nevertheless, some publications (such as Research Fortnight) managed to synthesise the results into derived league tables fairly quickly after they were published. Recently the Times Higher Education, seeking continually to reinforce its position as the most authoritative league table compiler, began to produce a ‘table of tables’ based on the rankings of three of the major UK broadsheet newspapers.

The US has also been producing league tables for many years, and the US News and World Report tables are studied anxiously by university presidents each year. The US higher education system is more diversified than those of most European countries, however, so these rankings are broken down into various categories: graduate schools, ‘national universities’, liberal arts colleges, etc.

The production of these league tables at regular intervals, and the often impassioned responses to them by university managers, illustrate both the highly competitive nature of global higher education and the limitations of any measure of complex organisations such as universities. University managers agonise over how their institutions will fare when the tables are published, and over whether their position will suffer through errors in the data, or through misunderstandings by the compilers or by the many data sources used in the compilation, most of which are beyond their control. Careers can be damaged or broken by failure to meet a university’s aspirations to climb the rankings, or by sudden falls in position. Academic concerns for scientific rigour are affronted by what can seem an annual circus in which they have to compete. And yet the very fact of competition is what makes these tables matter, however much academics may dismiss them, and however many indignant letters complaining of misrepresentation and pleading mitigation appear in the press after each table is published. This is why the Times Higher Education, having published its league tables for many years with claims to authoritativeness, has recently decided that it needs to revise its compilation procedures quite drastically to make its table more reliable, and is doing so with a great trumpeting of virtue: an irony which will not have gone unnoticed by university managers who have suffered perceived injustices at its hands over the years.

We found that research assessment in all of the countries we studied combines quantitative and qualitative criteria, and that the mix tends to differ according to how much money a country is prepared to spend on the assessment exercise. On the qualitative side are peer review, generally considered the most important element in research assessment (note, for example, how the Times Higher Education is currently seeking to make it more rigorous in its ranking compilation), and ‘measures of esteem’ (as they are known in the RAE), which include academic prizes won, prestigious editorial positions, etc. On the quantitative side are, of course, citations and their conversion into impact factors. There are also output venue rankings, i.e. league tables of journals, and sometimes of monograph and textbook publishers, in which publication in a given venue attracts a differentiated score that can be fed into a formula. And finally, there are volume indicators, e.g. numbers of PhD or postgraduate students, amounts of external research funding won, etc.
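Since the paragraph above refers to the conversion of citations into impact factors, it may help to show the best-known such measure. The block below gives the standard two-year journal impact factor as published in Thomson Reuters’ Journal Citation Reports; the notation is ours, and the worked figures are invented purely for illustration.

$$\mathrm{IF}_{y} = \frac{C_{y,\,y-1} + C_{y,\,y-2}}{N_{y-1} + N_{y-2}}$$

Here $C_{y,x}$ is the number of citations received in year $y$ by items the journal published in year $x$, and $N_x$ is the number of citable items (broadly, research articles and reviews) it published in year $x$. For a hypothetical journal receiving 350 citations in 2009 to its 2008 items and 250 to its 2007 items, having published 110 and 90 citable items in those two years:

$$\mathrm{IF}_{2009} = \frac{350 + 250}{110 + 90} = \frac{600}{200} = 3.0$$

Because the numerator counts citations to everything the journal published while the denominator counts only citable items, the figure can be nudged upwards by editorial choices about what counts as citable, which is one reason it is often described as manipulable.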

What we found was that the more a country wishes to save on the overhead costs of an assessment regime, the more it tends towards quantitative measures, including bibliometric ones. Thus, when the UK set out to reform its RAE system a few years ago, it wanted to drive down the cost of the exercise by introducing a large element of bibliometrics. This was opposed, and to some extent discredited, during the pilot testing last year, and in the end we seem to be back with virtually the same system we started with, with a strong emphasis on peer review. The amount of funding at stake for institutions is a major factor. The UK system provides much more funding to the winners of the RAE than any of the other regimes we looked at, so it seems likely that, where the pay-out is this high, those who stand to gain or lose from it consider a credible, if costly, exercise worthwhile. Thinking about our environments model (Figure 1), what this tells us is that domain values are asserting themselves by choosing peer review over other criteria. In truth, though there are always complaints, peer review does seem to be generally accepted by the academy, whereas the attempt to shift the balance towards a more quantitative basis was not.

Figure 1: Research environments model.

In our study we found that two of the countries surveyed took a fairly strong line on bibliometric indicators. Both Australia and Denmark are seeking to apply systems that are relatively low-cost (compared to the UK approach) and based largely on bibliometric indicators. Accordingly, the Danes have already developed, and the Australians are developing, a ranked list of publication venues (in Denmark known as the Bibliometric Research Indicator). The Australian list is limited to journals, but the Danish one includes book publishers as well. Outputs are awarded points on the basis of the ‘strength’ of the venue in which they appear, and co-authorship leads to elaborate fractionalising of points according to a precise formula. One can see why this approach could be contentious if there were large sums of money riding on it. However, there are indications that the Australian system will eventually determine the share-out of block grant funding of research. It will be fascinating to see whether the bibliometric component can remain strong in that event.
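To make the idea of venue-based points with fractional counting concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the output types, the two venue levels, the point values in `POINTS` and the simple equal-split rule are hypothetical, and are not the actual tariff or fractionalisation formula of the Danish indicator or the Australian list.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical points per (output type, venue level). Level 2 stands for a
# venue in the top band of a national ranked list. Illustrative values only.
POINTS = {
    ("article", 1): 1.0,
    ("article", 2): 3.0,
    ("monograph", 1): 5.0,
    ("monograph", 2): 8.0,
}


@dataclass
class Output:
    kind: str                       # "article" or "monograph"
    venue_level: int                # 1 or 2, taken from the ranked venue list
    author_affiliations: List[str]  # one institution name per co-author


def institution_score(outputs: List[Output], institution: str) -> float:
    """Sum the points credited to `institution`.

    Each output's points are split equally among its co-authors, and the
    institution receives the share belonging to its own authors (a simple,
    hypothetical fractional-counting rule).
    """
    total = 0.0
    for out in outputs:
        base = POINTS[(out.kind, out.venue_level)]
        own_authors = out.author_affiliations.count(institution)
        total += base * own_authors / len(out.author_affiliations)
    return total


if __name__ == "__main__":
    outputs = [
        # Top-level journal article with three co-authors at two institutions.
        Output("article", 2, ["Inst A", "Inst A", "Inst B"]),
        # Single-authored monograph with a standard-level publisher.
        Output("monograph", 1, ["Inst B"]),
    ]
    for inst in ("Inst A", "Inst B"):
        print(inst, institution_score(outputs, inst))
```

Under these assumed values, Inst A is credited with two-thirds of the article’s 3 points and Inst B with the remaining third plus the monograph’s 5. Even this toy version suggests why the approach is contentious: moving the same article from a level 1 to a level 2 venue triples its value, so the ranked list, rather than the work itself, largely determines the score.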

Interviews with academics in several recent studies suggest that they are frequently sceptical about the numerical values that external bodies ascribe to publication venues, whether based on impact factors or on peer review. On the basis of a survey of researchers’ dissemination practices undertaken last year, the Research Information Network said this about UK researchers:

‘We have already noted … the perception widespread among researchers that the RAE and the related policies of their institutions put pressure on them to publish in journals with a high impact factor rather than in other journals that would be more effective in reaching their target audience, or to use other channels altogether. Some researchers seek actively to ignore such pressures, but many view the RAE as a game they are forced to play’.[7]

In the UK, the Times Higher Education — which recently announced a partnership with Thomson Reuters over the bibliometrics it uses in its league tables — reported in June 2010 that UK researchers were now being cited almost as often as those in the US. This finding immediately drew some criticism from academics:

‘Graham Woan, reader in astrophysics at the University of Glasgow, warned that citation statistics could be skewed: “The metric used to project the raw data on to a ranking does much to define the rank order, and it is maybe only loosely coupled to the true scientific vigour and quality of a country.”’[8]

This comment captures very concisely the dilemma at the heart of assessment. How do we support and sustain the ‘true scientific vigour and quality of a country’ while yet allowing it to be measured by those who pay for it?
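Woan’s point, that the metric used to turn raw citation data into a ranking does much to define the rank order, is easy to demonstrate. The sketch below uses invented paper and citation counts for three hypothetical countries (not real data) and ranks them first by total citations and then by citations per paper.

```python
# Invented figures for three hypothetical countries: (papers, citations).
DATA = {
    "Country X": (100_000, 900_000),
    "Country Y": (40_000, 480_000),
    "Country Z": (10_000, 150_000),
}


def rank(data, metric):
    """Return country names ordered from best to worst under `metric`."""
    return sorted(data, key=lambda c: metric(*data[c]), reverse=True)


def total_citations(papers, citations):
    # Rewards sheer volume of output.
    return citations


def citations_per_paper(papers, citations):
    # Normalises by output, rewarding smaller, highly cited systems.
    return citations / papers


if __name__ == "__main__":
    print(rank(DATA, total_citations))      # X (900k) > Y (480k) > Z (150k)
    print(rank(DATA, citations_per_paper))  # Z (15.0) > Y (12.0) > X (9.0)
```

With the same underlying data, the two metrics produce exactly opposite orders, which is the sense in which the choice of projection, rather than the research itself, can define the ranking.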

Scientific vigour and quality are safeguarded within their domains, and the domains are clearly suspicious of impact factors, which are manipulable and too narrowly based on citations. Citations measure popularity, but take no account of, for example, marginal areas of a discipline which may still be important, or worthy of nurturing, even if relatively few researchers are active there.

Despite this, metrics do matter. They are useful to institutions in providing snapshot research profiles of individuals, groups and whole institutions. Because they are used in compiling league tables, and play a part (to a greater or lesser degree, as we have seen) in national systems of research assessment, it makes sense for institutions to have bibliometric expertise on site, and the library is the obvious location. A Research Information Network study undertaken jointly with OCLC Research recently found that the University of Leicester has recruited a professional bibliometrician, who will be based in the library. The university was adamant that it wanted a research statistician rather than a librarian-turned-bibliometrician.[9]

We undertook this study during 2009, while the pilot of the Research Excellence Framework (REF), the successor scheme to the RAE, was running. At that time it was not at all clear that the government would turn away from its much-publicised intention to place greater emphasis on bibliometrics in the REF, as in fact then happened. Had it not done so, we might have seen many more institutions make the same decision as Leicester. Even so, it does seem sensible to have such expertise in-house, ideally in the library. Though the eventual balance of the REF may suggest that for the UK this is not such a critical skillset after all, in reality bibliometrics will continue to play a large part in assessment, and in league tables. Perhaps one of the main reasons to have a bibliometrician is not just to be able to model possible bibliometrics-based funding outcomes, but also to be able to present the institution with the drawbacks and limitations, as well as the benefits, of bibliometrics in the overall assessment mix.

The UK RAE system has worked over the last couple of decades to concentrate research funding in the hands of a top group of universities. This can be a contentious use of public money, because it creates a ‘two-tier’ élite system funded at public expense. However, if that leads to a more competitive research sector for the country as a whole, the consensus seems to be that it is worth the democratic discomfort. In our report we quote a 2006 report by Lambert and Butler which points to this deliberate UK strategy:

‘One obvious explanation for the relatively high showing of UK universities in the league tables is that its research funding is much more heavily concentrated on the top institutions than elsewhere in Europe. Well over three-fifths of public and business investment in university research in England is directed to the top 15 universities’.[10]

The UK has in fact engineered its university research capacity, with the assistance of the RAE, to allow the country to compete better with the US, which has been the dominating force in world research and its accompanying economic benefits for some considerable time. If we use the analogy of an ‘arms race’, the US achieves its dominance not through wielding more weapons, but through wielding fewer, much more powerful ones:

‘In Europe, on paper there are 2,000 universities focusing in theory on research and competing for people and funding. By comparison, in the US there are only 215 universities offering postgraduate programs, and less than a hundred among them are officially considered “research intensive universities”.’[11]

The US system is of course very different. Some universities are considered ‘public’ (though the proportion of public funding they receive is generally much lower than would be true in Europe); many are private. Because of this mixed economy, there is no national system of research assessment. Nevertheless, there is one major productivity driver in the US university system, and that is tenure: the system that permits academic staff to reach a point in their careers where they can safely pursue their work knowing they have a ‘job for life’ (from the point of achieving tenure, scholars in principle need only be concerned with the demands and exigencies of their domain environment). The requirements for tenure vary considerably from institution to institution. Many academics never achieve it; others are fairly mature before they do. But inasmuch as tenure is a form of researcher assessment, it provides an interesting parallel to our study of research assessment regimes. Might libraries in US universities play a role in supporting the tenure and promotion system akin to the one we envisage for them in support of research assessment in publicly funded regimes?

We have discussed the Danish, Australian and UK systems, and can see that the assessment regime has a strong impact on behaviours in these countries. In a sense what is happening is that the freedom of researchers to do the work they want to do is being compromised and their outputs are necessarily being skewed towards the assessment environment. In the UK that means favouring journal publication over other forms, since journal articles are more quickly produced than monographs and so can be counted more readily. In the case of Denmark and Australia, the assessment regime’s requirement to use nationally produced tables of output venue rankings inevitably skews behaviour in ways one can readily see are likely to be contentious. There is a danger that the tables ossify what should be a dynamic disciplinary literature. They also skew publishing behaviour and are biased against new journals or publishers entering the field.

When we looked at the Netherlands, however, we found a different picture, in which assessment was performed largely on an institutional basis. The absence to date of a government agenda to concentrate research for reasons of economic competitiveness, in the Netherlands and indeed in much of Europe, has allowed a traditional culture of university self-regulation to continue. There are signs, however, that this may be changing. Ireland as yet has no country-wide system of assessment, and so it too presents the institutional environment as the one with the most influence.

Returning to our research environments model in the context of the US tenure system, it seems clear that its key environments are those of the domain and the institution. Tenure is achieved through a form of internal peer review, which is highly concentrated in that it applies researcher by researcher. While reputation in the wider field of one’s discipline is of course critical, achieving tenure in one’s own institution is an even greater pressure — and the one feeds into the other. This is clearly brought out in the recent Faculty survey by Ithaka S+R in the US:

‘For most faculty members, our data seem to be consistent with other research indicating that faculty interest in revamping the scholarly publishing system is secondary to concern about career advancement, and that activities that will not be positively recognized in tenure and promotion processes are generally not a priority’ (Ithaka S+R Faculty survey 2009).

What is said here about the US in the context of tenure could equally be said about other countries (such as the Netherlands) with tenure systems, and indeed about the countries in our study which have abolished tenure — such as the UK. Tenure may not exist as a formal system, but the effects it creates exist nonetheless in the career-building culture of the academy. Reform of scholarly publishing, open access, the population of repositories and other such high library priorities are very much second order activities for academics.

Tenure exerts strong pressure on the scholar as an individual. The recent, highly publicised murder of three professors at the University of Alabama in Huntsville by an assistant professor who had been denied tenure drew this comment from a senior academic:

‘As much as it may be uncomfortable for us to admit and discuss it, we in academe need to confront the psychological and physiological effects of our culture on our rising scholars. We have done a … poor job of providing the support and mentoring appropriate for such major, stressful, career make-or-break situations as dissertation defenses and tenure votes … It is time to end any tolerance for the notion that “we eat our young” and that such intellectual brutality is somehow an indicator of rigor.’[12]

Thus, while tenure may embody domain values, their institutional expression in highly competitive universities can be oppressive. Some 45% of sociologists surveyed by Ithaka think that tenure skews their dissemination options in harmful ways.

The skew in the UK is caused by the RAE:

‘Many researchers believe the current environment puts pressure on them to publish too much, too soon, and in inappropriate formats … researchers are increasingly aware that publications serve not only as means of communication. They can be monitored or measured as indicators of quality or impact … the perception that their work is being monitored and assessed, by the RAE in particular, has a major influence on how researchers communicate’.[7]

The suggestion that the RAE encourages ‘game-playing’ appears in several places throughout the Research Information Network report. To emphasise the parallel effect created by tenure in the US, the following quote comes from a researcher surveyed as part of a recent comprehensive study by the Center for Studies in Higher Education at the University of California, Berkeley:

‘The increasing need to demonstrate progress on a second project … may encourage a certain amount of “games-playing” to the detriment of good scholarship … So that, at a place like this (not Harvard, Yale, or Princeton), they’re not asking for the second book yet for tenure in history, but they are asking more than they used to for signs of how far along are you on the second book. And frankly, I think it encourages people to fake it’.[13]

Though both systems exhibit skew, and cause unhappiness to researchers as a consequence, there are some considerable differences in the effects. One of the most significant relates to the scholarly place of the monograph. In the US, as we have seen, tenure can be held back in some institutions until researchers have completed a second book. In the UK, by contrast, humanities scholars complain that the system does not value the monograph as they do:

‘In this environment, articles in scholarly journals are increasing their dominance over all other forms of publication and dissemination … journals and the articles they contain are also the form of publication most easily measured, ranked and assessed, and thus most used in the measurement of research performance … while some researchers in the humanities and social sciences complain of inappropriate pressure to publish articles rather than books, a majority feel that monographs remain the single most important mode of dissemination, one around which they build their careers.’[7]

In the UK, the charge against the RAE is that it encourages game-playing by whole departments, or whole institutions — particularly in the area of the star researcher ‘transfer market’, but also in areas such as choice of discipline subcategory to enter. In the 2008 RAE, the Unit of Assessment Library & Information Management, for example, caused some surprise in the library community by including King’s College London (which does not have a library school, but submitted its Digital Humanities unit in this category) and excluding Strathclyde University, whose School of Information Science was merged into a new Department of Computer and Information Sciences following the previous RAE in 2001.

At the recent RLG Partnership Annual Symposium in Chicago, Adrian Johns — who gave a keynote address entitled ‘As our readers go digital’ — said in conclusion that the role of librarians in relation to academics needs to be remade in a more permeable way. He spoke of the role of ‘intelligencers’ in the days of the ‘Republic of Letters’ — people who acted as conduits for scholarly knowledge among scientists and philosophers, who advised scholars of the latest publications and findings in their field. This is a theme we are pursuing elsewhere in our Research Information Management programme, looking specifically at the role of subject librarians — or what Jim Neal of Columbia University in a panel at the ACRL conference in 2009 called ‘Subject Librarian 2.0’.[14] In thinking about assessment, it is clear that libraries have a number of roles to play in the processes that exist in the national regimes for assessment. Most obviously, insofar as these systems (and tenure and promotion systems in the US) require correct bibliographic metadata to operate, the library is the obvious place to get it.

But there is a more strategic role that we librarians can and should play here, and it is one which I would suggest has been weakened over the past two or three decades of technological progress, as libraries have embraced technologies that concentrated on data processing and so allowed them to play important roles in university administration. Where overall funding has been more constrained, this shift has come at the expense of their scholarly curation activities, and they now need to reassert their role in respect of scholarly knowledge. The library should be knowledgeable about knowledge, and should be the main authority on the campus about the ways knowledge is generated and transmitted through all of the disciplines it contains. The library is the only neutral scholarly actor on the campus. Certainly it assists with administration too, and much of what we discovered in this project is that the administrative role is the main one performed by the library in relation to assessment. But there are several areas of expertise that are not easily found on most university campuses today, and yet which belong in this place of ‘neutral scholarship’. They would include expertise in bibliometrics, in copyright and licensing (and thus in open access), in publishing, and in the tools of scholarly dissemination (the blogs that are most useful in particular fields, etc.). These areas of expertise, ideally located in subject liaison librarians, should collectively be represented by the University Librarian and senior colleagues, to whom the deans and principals of our institutions of Higher Education should turn regularly for advice, and from whom they should hear regularly about the changing contours of scholarly outputs in the disciplines of their institutions.

There surely is no longer any doubt that institutional libraries must set out on a radically transforming path, scaling back on institutional-level cataloguing and acquisition, and on institutional library systems management. There are signs in some institutions that this is already happening. These tasks will be done increasingly by machinery and third parties. The institutional research library needs to focus on the unique materials it holds within its collections, and put them in the flow. And it needs to focus on the scholarly activity that goes on all around it on campus — more quickly, more vigorously and in greater volume than ever — and largely without reference to the library. Without the assistance of the library to curate, advise on and preserve the manifold outputs of this activity, while individual scholars may still manage to thrive and build their reputations, they will do so within an impoverished infrastructure for scholarship, using a compromised archive, and their legacy to future scholars will be insecure. Our greatest challenge now is to understand this, and to shape our professional structures to deal with it.

June 2010

Lorcan Dempsey’s weblog [http://orweblog.oclc.org/archives/001379.html].

Key Perspectives Ltd (2009): A Comparative Review of Research Assessment Regimes in Five Countries and the Role of Libraries in the Research Assessment Process. Report commissioned by OCLC Research. Published online at [http://www.oclc.org/research/publications/library/2009/2009-09.pdf].

MacColl, John (2010): Research Assessment and the Role of the Library. Report produced by OCLC Research. Published online at [http://www.oclc.org/research/publications/library/2010/2010-01.pdf].

Research Information Network (2009): Communicating knowledge: how and why UK researchers publish and disseminate their findings [www.rin.ac.uk/communicating-knowledge].

‘Britain closes science-citations gap on US’, Times Higher Education, 10 June 2010.

Research Information Network (2010): Research support services in UK universities [in press].

Lambert, Richard and Nick Butler (2006): ‘The Future of European Universities: Renaissance or Decay?’ Centre for European Reform.

Sironi, Andrea (2007): ‘European Universities Should Try to Conquer the World’. Università Bocconi web site. Available online at [http://www.virtualbocconi.com/Articoli/European_Universities_Should_Try_to_Conquer_the_World/default.aspx].

The Chronicle of Higher Education, 21 February 2010.

Center for Studies in Higher Education, UC Berkeley (2010): Assessing the Future Landscape of Scholarly Communication: An Exploration of Faculty Values and Needs in Seven Disciplines [http://escholarship.org/uc/item/15x7385g].

See blog post at [http://www.learningtimes.net/acrlconference/2009/subject-liaison-20/].